Copyright (c) Hyperion Entertainment and contributors.

DMA Resource

Author

Jamie Krueger, BITbyBIT Software Group LLC

Copyright (c) 2019 Trevor Dickinson

Used by permission.

DMA Engine

Some hardware targets include a DMA engine which can be used for general purpose copying. This article describes the DMA engines available and how to use them.

Hardware Features

The Direct Memory Access (DMA) Engines found in the NXP/Freescale p5020, p5040 and p1022 Systems on a Chip (SoCs), used in the AmigaONE X5000/20, X5000/40 and A1222 respectively, are quite flexible and powerful. Each of these chips contains two distinct engines with four data channels each. This provides a total of eight DMA Channels working at once, with up to two DMA transactions actually being executed at the same time (one on each of the two DMA Engines).

Further, each of the four DMA Channels in a DMA Engine may be individually programmed to handle a single transaction, a Chain of transactions, or even Lists of Chains of transactions. The DMA Engines automatically arbitrate control between the DMA Channels according to the bandwidth setting programmed for each Channel (typically 1024 bytes).

This means that after completing a transfer of 1024 bytes (for example), the hardware will consider switching to the next Channel to allow it to move another block of data, and so on in a round-robin fashion. If all other DMA Channels on a given DMA Engine are idle when arbitration would take place, the hardware will not arbitrate control to another Channel, but simply continue processing the transaction(s) for the Channel it is on.
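The arbitration behavior described above can be modeled on any host machine. The following is a minimal sketch, not the hardware's actual algorithm: it assumes a strict round-robin order and a fixed 1024 byte bandwidth setting on every Channel, and the names `simulate_engine`, `NUM_CHANNELS` and `BANDWIDTH` are invented for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define NUM_CHANNELS 4u     /* channels per DMA Engine, as described above */
#define BANDWIDTH    1024u  /* assumed bandwidth setting, in bytes */

/* Model one DMA Engine. remaining[i] holds the bytes still queued on
 * channel i. Returns how many times control moved to a different channel. */
uint32_t simulate_engine(uint32_t remaining[NUM_CHANNELS])
{
    assert(remaining != NULL);

    uint32_t switches = 0;
    uint32_t current = 0;

    for (;;) {
        /* Move at most one bandwidth slice on the current channel. */
        uint32_t slice = remaining[current] < BANDWIDTH ? remaining[current]
                                                        : BANDWIDTH;
        remaining[current] -= slice;

        /* Arbitration point: look for the next channel with pending data. */
        uint32_t next = current;
        for (uint32_t step = 1; step < NUM_CHANNELS; step++) {
            uint32_t candidate = (current + step) % NUM_CHANNELS;
            if (remaining[candidate] > 0) {
                next = candidate;
                break;
            }
        }

        if (next != current) {
            current = next;   /* hand control to the next busy channel */
            switches++;
        } else if (remaining[current] == 0) {
            break;            /* every channel is idle: nothing left to do */
        }
        /* Otherwise all other channels are idle, so stay on this one. */
    }
    return switches;
}
```

With a single busy Channel the model never switches. With two Channels holding 2048 bytes each, control alternates after every 1024 byte slice.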

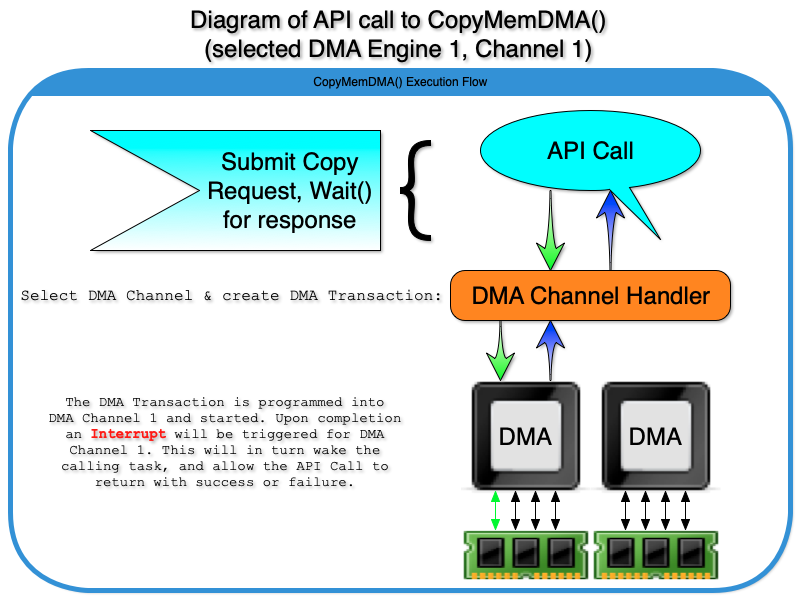

DMA Copy Memory - Execution Flow Diagram

What a call to perform a DMA copy does internally

As shown in the above diagram, when the user makes a call to request a memory copy be performed by the DMA hardware, the next available DMA Channel is selected for use and a DMA Transaction (source, destination and size) is constructed. The DMA Transaction is then programmed into the DMA Engine which owns the available DMA Channel.

At this point the calling task will Wait() until it is signaled that the transaction has completed. It then returns to the caller with the result. This provides a basic blocking function, which only returns once the data has been copied. This blocking behavior is the simplest to use and is what most applications expect from a memory copy function.

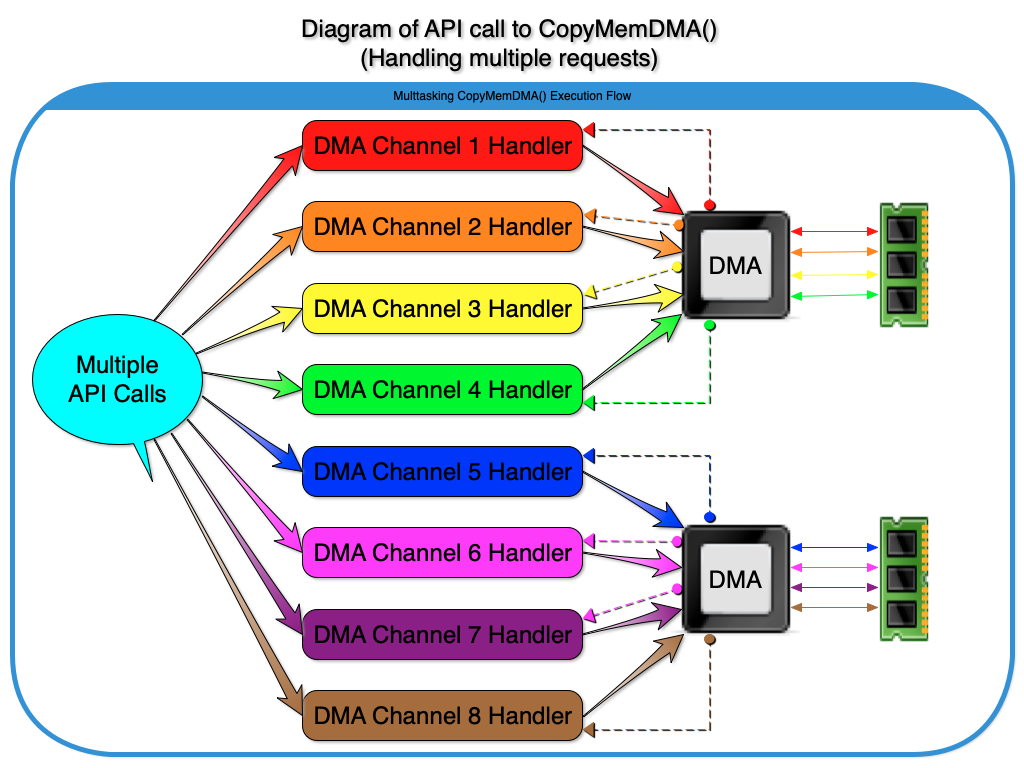

Diagram of multitasking DMA Copies

How multiple simultaneous DMA copies are handled

When multiple user calls requesting a DMA copy arrive at once, each one is handed to a dedicated DMA Channel handling task for processing. As the diagram above demonstrates, there are two separate DMA Engines available, each with four channels that may be programmed at the same time. The hardware will then arbitrate the actual data move across these channels according to their respective bandwidth settings (usually 1024 bytes).

In the diagram above, a separate color indicates a distinct data path from the caller through the DMA hardware to the system RAM. A dashed line of the matching color indicates an Interrupt line signaling the respective DMA Channel Handler with the completion of the transaction. The handler task then signals back to the original caller, which returns to the user with a success or failure result.

Each of the eight DMA Channels can handle either a single block transaction or an entire chain of block transactions before it signals completion and returns to the original caller. If all eight DMA Channels are busy processing their requested transactions when further DMA copy requests arrive, each new request is assigned a DMA Channel to wait on (managed via a Mutex lock on each Channel) and blocks until it is allowed to add its DMA transaction to the Channel's queue.

fsldma.resource

The fsldma.resource API is provided automatically in the kernel for all supported machines (currently the AmigaONE X5000/20, X5000/40 and A1222).

The FslDMA API

The API provided by the fsldma.resource currently consists of:

- Copy Memory functions

Copy Memory Functions

Three API calls make up the FslDMA API: CopyMemDMA(), CopyMemDMATags() and CopyMemDMATagList().

    BOOL CopyMemDMA( CONST_DMAPTR pSourceBuffer, DMAPTR pDestBuffer, uint32 lBufferSize );

    BOOL CopyMemDMATags( CONST_DMAPTR pSourceBuffer, DMAPTR pDestBuffer, uint32 lBufferSize, uint32 TagItem1, ... );

    BOOL CopyMemDMATagList( CONST_DMAPTR pSourceBuffer, DMAPTR pDestBuffer, uint32 lBufferSize, const struct TagItem *tags );

The first call, CopyMemDMA(), attempts to perform a "blocking" operation (one that does not return to the user until the requested transfer has either succeeded or failed). A value of TRUE is returned upon success and FALSE if an error occurred. The CopyMemDMA() call will automatically fall back to using an internal fast CPU copy if the requested size is too small to be efficient, or if an error occurred in the DMA transaction.
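The fallback behavior described above can be pictured with a small host-side model. This is only a sketch under stated assumptions, not the resource's internal code: the 256 byte threshold, the `copy_with_fallback` wrapper and the `fake_dma_ok` and `fake_dma_fail` stand-ins are all hypothetical, and standard C types are used in place of the AmigaOS ones.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical threshold below which a DMA transfer is not worthwhile. */
#define SKETCH_MIN_DMA_SIZE 256u

/* Shape of a DMA copy routine in this model (stand-in for the hardware). */
typedef bool (*dma_copy_fn)(const void *src, void *dst, uint32_t size);

typedef enum { PATH_DMA, PATH_CPU } copy_path;

/* Try the DMA path first; use a CPU copy when the block is too small
 * or the DMA transaction reports an error. Returns the path taken. */
copy_path copy_with_fallback(dma_copy_fn dma, const void *src, void *dst,
                             uint32_t size)
{
    assert(src != NULL && dst != NULL);

    if (dma != NULL && size >= SKETCH_MIN_DMA_SIZE && dma(src, dst, size)) {
        return PATH_DMA;
    }
    memcpy(dst, src, size);   /* CPU fallback */
    return PATH_CPU;
}

/* Stand-in that behaves like a successful DMA transaction. */
bool fake_dma_ok(const void *src, void *dst, uint32_t size)
{
    memcpy(dst, src, size);
    return true;
}

/* Stand-in that behaves like a failed DMA transaction. */
bool fake_dma_fail(const void *src, void *dst, uint32_t size)
{
    (void)src; (void)dst; (void)size;
    return false;
}
```

In this model the destination holds the copied data whichever path is taken.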

In theory, any contiguous block of memory from 1 Byte up to 4GB in size may be transferred using any one of these calls. In practice, the DMA hardware directly accepts memory blocks of up to FSLDMA_MAXBLOCK_SIZE (64MB - 1 Byte). Any data block larger than FSLDMA_MAXBLOCK_SIZE is therefore automatically sent to the DMA hardware as a series of smaller chunks. For maximum efficiency, transfer sizes should be at least 256 Bytes and an even multiple of 64 Bytes. Odd sizes are handled by the hardware but will degrade performance.
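The size rules above translate into simple arithmetic. The sketch below is illustrative only: `SKETCH_MAXBLOCK_SIZE` is an assumed stand-in for FSLDMA_MAXBLOCK_SIZE using the value quoted in the text, the helper names are invented, and the way the resource really splits large transfers may differ.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Assumed stand-in for FSLDMA_MAXBLOCK_SIZE (64MB - 1 Byte). The real
 * constant is defined by the fsldma headers. */
#define SKETCH_MAXBLOCK_SIZE ((64u * 1024u * 1024u) - 1u)

/* Number of hardware-sized chunks needed for a transfer of `size` bytes. */
uint32_t dma_chunk_count(uint32_t size)
{
    if (size == 0) {
        return 0;
    }
    return (size - 1u) / SKETCH_MAXBLOCK_SIZE + 1u;
}

/* Length of chunk number `index` (0-based) of a `size` byte transfer. */
uint32_t dma_chunk_length(uint32_t size, uint32_t index)
{
    assert(index < dma_chunk_count(size));

    uint32_t left = size - index * SKETCH_MAXBLOCK_SIZE;
    return left < SKETCH_MAXBLOCK_SIZE ? left : SKETCH_MAXBLOCK_SIZE;
}

/* True when a size follows the efficiency advice above:
 * at least 256 bytes and a multiple of 64 bytes. */
bool dma_size_is_efficient(uint32_t size)
{
    return size >= 256u && (size % 64u) == 0u;
}
```

For example, a transfer of exactly 64MB needs two chunks in this model: one of 64MB - 1 Byte followed by one of a single byte.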

If you are transferring many large blocks of data in series and wish to manually send them to the FslDMA API's memory copy functions one block at a time, then setting your block sizes to FSLDMA_OPTIMAL_BLKSIZE (64 MB - 64 Bytes) will provide the fastest transfer speeds with the least amount of CPU overhead.
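Sending a large buffer one block at a time could look roughly like the following host-side sketch. It makes several assumptions: `copy_in_blocks`, `copy_fn` and `cpu_copy` are hypothetical names, `cpu_copy` merely stands in for a call such as CopyMemDMA(), and `SKETCH_OPTIMAL_BLKSIZE` mirrors the value quoted above rather than being taken from the real headers.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Assumed stand-in for FSLDMA_OPTIMAL_BLKSIZE (64 MB - 64 Bytes). */
#define SKETCH_OPTIMAL_BLKSIZE ((64u * 1024u * 1024u) - 64u)

/* Shape of the per-block copy routine used by this sketch. */
typedef bool (*copy_fn)(const void *src, void *dst, uint32_t size);

/* Host-side stand-in for a DMA copy call; always succeeds. */
bool cpu_copy(const void *src, void *dst, uint32_t size)
{
    memcpy(dst, src, size);
    return true;
}

/* Copy `total` bytes in blocks of at most `block_size` bytes.
 * On success, stores the number of blocks sent in *calls (if not NULL).
 * Stops and returns false as soon as one block fails. */
bool copy_in_blocks(copy_fn copy, const void *src, void *dst,
                    size_t total, uint32_t block_size, uint32_t *calls)
{
    assert(copy != NULL && block_size > 0);

    const uint8_t *s = src;
    uint8_t *d = dst;
    uint32_t sent = 0;

    while (total > 0) {
        uint32_t len = total < block_size ? (uint32_t)total : block_size;
        if (!copy(s, d, len)) {
            return false;
        }
        s += len;
        d += len;
        total -= len;
        sent++;
    }
    if (calls != NULL) {
        *calls = sent;
    }
    return true;
}
```

The block size is a parameter so the loop can be exercised with small buffers. For real transfers the caller would pass the optimal block size.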

Further reference - The fsldma.resource AutoDoc

See the [fsldma.resource AutoDoc] file for more details on each API call.

Example usage

    #include <interfaces/fsldma.h>

    // Obtain the fsldma.resource
    struct fslDMAIFace *IfslDMA = IExec->OpenResource(FSLDMA_NAME);
    if ( NULL != IfslDMA )
    {
        // Allocate a couple of test buffers (fill the source with data)
        uint32 lSize = FSLDMA_OPTIMAL_BLKSIZE;
        APTR pSrc  = IExec->AllocVecTags(lSize,
                                         AVT_Type, MEMF_SHARED,
                                         AVT_ClearWithValue, 0xB3,
                                         TAG_DONE);
        APTR pDest = IExec->AllocVecTags(lSize,
                                         AVT_Type, MEMF_SHARED,
                                         AVT_ClearWithValue, 0,
                                         TAG_DONE);
        if ( (NULL != pSrc) && (NULL != pDest) )
        {
            // Call IfslDMA->CopyMemDMA() to perform the memory copy using the DMA hardware.
            // We use full 64-Bit values to pass in the source and destination to CopyMemDMA
            // so we need to use a "double cast" on the 32-Bit pointers returned by
            // AllocVecTags() in order to properly pass in fully extended 64-Bit pointers
            // (see <resource/fsldma.h> for more details)
            if ( TRUE == IfslDMA->CopyMemDMA((CONST_DMAPTR)(uint32)pSrc,
                                             (DMAPTR)(uint32)pDest,
                                             lSize) )
            {
                // Success - Do something with the copied data
            }
        }

        // Free our test buffers. This is done outside the check above so that
        // neither buffer leaks if only one allocation succeeded (FreeVec() accepts NULL).
        IExec->FreeVec(pSrc);
        IExec->FreeVec(pDest);
    }

Obtaining the fsldma.resource

Breaking the above example down, the first thing we do is include the interface header for the fsldma.resource and obtain the resource itself.

    #include <interfaces/fsldma.h>

    struct fslDMAIFace *IfslDMA = IExec->OpenResource(FSLDMA_NAME);

Allocating DMA Compliant Memory

Once we have successfully obtained the DMA resource we can directly use its API. The next step is to allocate some memory which we know is DMA compliant for use by the resource. This can be accomplished by using the IExec->AllocVecTags() function together with a couple of MMU functions. Together they allow you to ensure that the memory returned is properly aligned, contiguous, cache-inhibited and coherent.

We use the Tags version of the allocate memory function first so we can allocate a block of memory for our source buffer and fill it with some test value (in this case the byte value 0xB3).

We also need a destination buffer for our test. It only needs to start out cleared (filled with zeroes), so the example allocates it with the same function and sets AVT_ClearWithValue to 0.

Performance

Testing DMA memory copies against their CPU-based equivalents indicates that the DMA hardware on all three models (X5000/20, X5000/40 and A1222) moves data approximately two to three times faster than the CPU alone.

It should also be noted that these tests were performed while the CPU was effectively idle. CPU memory copy performance drops roughly in proportion to how busy the CPU is, so the more the CPU is doing, the longer a CPU copy takes to complete.

Conversely, DMA memory copy times are far more predictable. Each copy uses the same (minimal) amount of CPU overhead, and the time the DMA hardware needs to complete a transaction can be calculated for a range of conditions, from all other DMA Channels being idle to all DMA Channels being busy at once with data moves arbitrating between channels every 1024 bytes.

Optimal use of the DMA Engine

To gain the best performance from the DMA Engines, block sizes of 256 bytes or more should be used. Also, if possible the size should be an even multiple of at least 4 bytes.

Additionally, the memory blocks (source and destination) should be aligned to start on a 64 Byte boundary. Alignment requirements are one of the items taken care of for you by the DMAAllocPhysicalMemory() function.
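When buffers come from somewhere other than a DMA-aware allocator, the 64 Byte alignment rule can be checked with plain pointer arithmetic. The helpers below are a hypothetical host-side sketch (the names `is_dma_aligned`, `align_up_64` and `bytes_to_alignment` are not part of the FslDMA API) and use standard C types rather than the AmigaOS ones.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define DMA_ALIGNMENT 64u   /* boundary recommended in the text above */

/* True when the address starts on a 64 byte boundary. */
bool is_dma_aligned(const void *p)
{
    return ((uintptr_t)p % DMA_ALIGNMENT) == 0u;
}

/* Round an address up to the next 64 byte boundary
 * (already aligned addresses are returned unchanged). */
uintptr_t align_up_64(uintptr_t addr)
{
    return (addr + (DMA_ALIGNMENT - 1u)) & ~(uintptr_t)(DMA_ALIGNMENT - 1u);
}

/* Number of bytes between an address and the next 64 byte boundary. */
uint32_t bytes_to_alignment(uintptr_t addr)
{
    uint32_t pad = (uint32_t)(align_up_64(addr) - addr);
    assert(pad < DMA_ALIGNMENT);
    return pad;
}
```

A check like `is_dma_aligned(pSrc) && is_dma_aligned(pDest)` before a copy is one way to confirm that a pair of buffers follows the advice above.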

The DMA Engine can and does handle misaligned and odd sized data blocks by first shifting the minimum required bytes to correct the alignment, then taking smaller chunks of data until the largest chunk of the block can be moved. It can also handle copies of as little as one byte (don't do this). However, handling misaligned, odd sized or very small blocks in this way degrades performance.

In general, the larger the data block the better.